Swift video recording: shooting short videos by merging multiple clips

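In an earlier article, I showed how to record video using the AVCaptureSession class provided by AVFoundation.framework.

In that program, tapping the "Start" button began recording and tapping "Stop" saved the video. The whole video was recorded as one continuous take with no time limit. This article builds on that sample to implement short-video capture.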

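1. What the short-video capture feature needs to do

(1) Video can be recorded in segments. Holding down the "Record" button starts capturing from the camera; releasing the button pauses recording.

(2) The combined length of all clips is capped (15 seconds in this sample). A progress bar at the top of the screen updates in real time while recording.

(3) Tapping the "Save" button, or reaching the 15-second total, stops recording. The program then merges the clips and saves the result to the system photo library.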

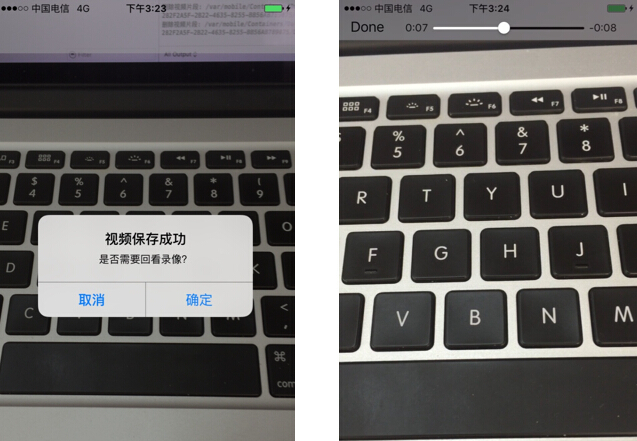

(4)保存成功後,可以回看生成的錄像。

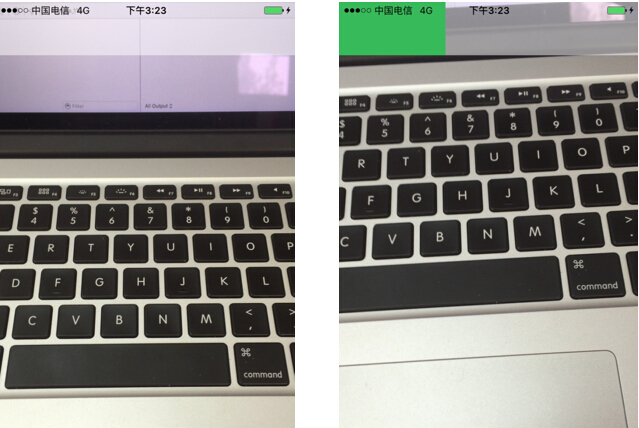

2,效果圖如下
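The time limit described in features (2) and (3) boils down to simple bookkeeping: add up the clip durations, compare the sum with the limit, and derive the progress bar fraction from it. Below is a minimal sketch of that logic in current Swift syntax. The `RecordingBudget` type and its member names are illustrative assumptions, not part of the sample code in this article.

```swift
import Foundation

/// Hypothetical helper: tracks how much of a fixed recording budget is used.
struct RecordingBudget {
    let totalSeconds: Double
    private(set) var clipDurations: [Double] = []

    init(totalSeconds: Double) {
        self.totalSeconds = totalSeconds
    }

    /// Sum of all recorded clip durations.
    var usedSeconds: Double { clipDurations.reduce(0, +) }
    /// Time still available, never negative.
    var remainingSeconds: Double { max(0, totalSeconds - usedSeconds) }
    /// Fraction of the budget used, clamped to 0...1 for a progress bar.
    var progress: Double { min(1, usedSeconds / totalSeconds) }
    /// True once the limit is reached and the clips should be merged.
    var isExhausted: Bool { remainingSeconds <= 0 }

    mutating func addClip(duration: Double) {
        clipDurations.append(duration)
    }
}

var budget = RecordingBudget(totalSeconds: 15)
budget.addClip(duration: 4)
budget.addClip(duration: 6)
print(budget.remainingSeconds, budget.isExhausted) // prints "5.0 false"
budget.addClip(duration: 5)
print(budget.progress, budget.isExhausted)         // prints "1.0 true"
```

Keeping this state in one small value type makes it easy to reset everything when a new recording starts.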

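3. How it works

(1) Each clip recorded while the button is held down is, as before, written by AVCaptureMovieFileOutput to the Documents folder, with the names output-1.mov, output-2.mov, and so on.

(2) AVMutableComposition splices the video and audio tracks of the individual clips together.

(3) AVAssetExportSession compresses the merged composition into one final video file, mergeVideo-****.mov (this article uses the highest-quality preset), which is then saved to the system photo library.

(4) AVPlayerViewController is used to play the recording back.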
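Steps (2) and (3) can be sketched in current Swift syntax as follows. This is an illustration under stated assumptions rather than the code used in this article (the sample below is written in the older Swift 2 syntax). The function names `makeComposition(from:)` and `export(_:to:completion:)` are made up for this sketch, and the synchronous `duration` and `tracks(withMediaType:)` accessors are used for brevity even though newer SDKs flag them as deprecated in favor of asynchronous loading.

```swift
import AVFoundation

/// Builds one composition by appending each clip's video and audio back to back.
func makeComposition(from clips: [AVAsset]) throws -> AVMutableComposition {
    let composition = AVMutableComposition()
    guard
        let videoTrack = composition.addMutableTrack(
            withMediaType: .video, preferredTrackID: kCMPersistentTrackID_Invalid),
        let audioTrack = composition.addMutableTrack(
            withMediaType: .audio, preferredTrackID: kCMPersistentTrackID_Invalid)
    else {
        return composition
    }

    var insertTime = CMTime.zero
    for clip in clips {
        let range = CMTimeRange(start: .zero, duration: clip.duration)
        // A clip that lacks a video or audio track is skipped for that track only.
        if let source = clip.tracks(withMediaType: .video).first {
            try videoTrack.insertTimeRange(range, of: source, at: insertTime)
        }
        if let source = clip.tracks(withMediaType: .audio).first {
            try audioTrack.insertTimeRange(range, of: source, at: insertTime)
        }
        // The next clip starts where this one ends.
        insertTime = CMTimeAdd(insertTime, clip.duration)
    }
    return composition
}

/// Exports the composition to `outputURL` as a QuickTime movie.
func export(_ composition: AVComposition, to outputURL: URL,
            completion: @escaping (Bool) -> Void) {
    guard let exporter = AVAssetExportSession(
        asset: composition, presetName: AVAssetExportPresetHighestQuality) else {
        completion(false)
        return
    }
    exporter.outputURL = outputURL
    exporter.outputFileType = .mov
    exporter.exportAsynchronously {
        // Report success only when the export actually completed.
        completion(exporter.status == .completed)
    }
}
```

Using `.first` instead of indexing with `[0]` avoids a crash when a clip happens to have no audio track, for example when microphone access was denied.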

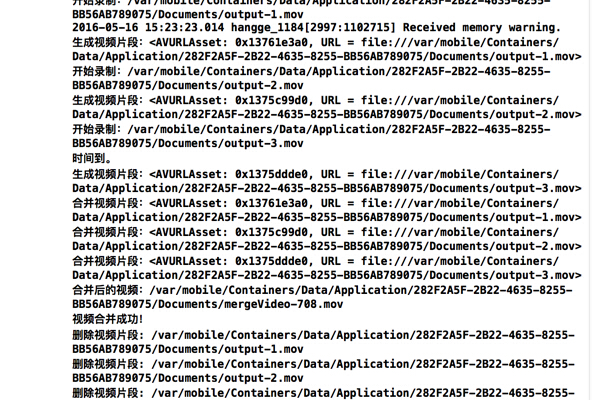

4,錄制一段由三個片段合成的視頻,控制台信息如下:

5. Sample code

import UIKit

import AVFoundation

import Photos

import AVKit

class ViewController: UIViewController , AVCaptureFileOutputRecordingDelegate {

    // Capture session: the bridge that coordinates data flow from inputs to outputs
    let captureSession = AVCaptureSession()

    // Video input device
    let videoDevice = AVCaptureDevice.defaultDeviceWithMediaType(AVMediaTypeVideo)

    // Audio input device
    let audioDevice = AVCaptureDevice.defaultDeviceWithMediaType(AVMediaTypeAudio)

    // Writes the captured video to a file
    let fileOutput = AVCaptureMovieFileOutput()

    // Record and save buttons
    var recordButton, saveButton : UIButton!

    // All recorded clips
    var videoAssets = [AVAsset]()

    // File paths of all recorded clips
    var assetURLs = [String]()

    // Index used to name each clip file
    var appendix: Int32 = 1

    // Maximum total recording time (seconds)
    let totalSeconds: Float64 = 15.00

    // Frames per second (used as the CMTime timescale)
    var framesPerSecond:Int32 = 30

    // Remaining recording time
    var remainingTime : NSTimeInterval = 15.0

    // Whether recording has been stopped because the time limit was reached
    var stopRecording: Bool = false

    // Timer for the remaining time
    var timer: NSTimer?

    // Timer that drives the progress bar
    var progressBarTimer: NSTimer?

    // Interval of the progress bar timer
    var incInterval: NSTimeInterval = 0.05

    // Progress bar
    var progressBar: UIView = UIView()

    // Current end position of the progress bar
    var oldX: CGFloat = 0

    override func viewDidLoad() {
        super.viewDidLoad()

        // Add the video and audio input devices
        let videoInput = try! AVCaptureDeviceInput(device: self.videoDevice)
        self.captureSession.addInput(videoInput)
        let audioInput = try! AVCaptureDeviceInput(device: self.audioDevice)
        self.captureSession.addInput(audioInput)

        // Add the movie file output
        let maxDuration = CMTimeMakeWithSeconds(totalSeconds, framesPerSecond)
        self.fileOutput.maxRecordedDuration = maxDuration
        self.captureSession.addOutput(self.fileOutput)

        // AVCaptureVideoPreviewLayer shows the live camera image in the view controller
        let videoLayer = AVCaptureVideoPreviewLayer(session: self.captureSession)
        videoLayer.frame = self.view.bounds
        videoLayer.videoGravity = AVLayerVideoGravityResizeAspectFill
        self.view.layer.addSublayer(videoLayer)

        // Create the buttons
        self.setupButton()

        // Start the session
        self.captureSession.startRunning()

        // Add the progress bar
        progressBar.frame = CGRect(x: 0, y: 0, width: self.view.bounds.width,
                                   height: self.view.bounds.height * 0.1)
        progressBar.backgroundColor = UIColor(red: 4, green: 3, blue: 3, alpha: 0.5)
        self.view.addSubview(progressBar)
    }

    // Create the buttons
    func setupButton(){
        // Record button (hold down to record)
        self.recordButton = UIButton(frame: CGRectMake(0,0,120,50))
        self.recordButton.backgroundColor = UIColor.redColor()
        self.recordButton.layer.masksToBounds = true
        self.recordButton.setTitle("Record", forState: .Normal)
        self.recordButton.layer.cornerRadius = 20.0
        self.recordButton.layer.position = CGPoint(x: self.view.bounds.width/2,
                                                   y:self.view.bounds.height-50)
        self.recordButton.addTarget(self, action: #selector(onTouchDownRecordButton(_:)),
                                    forControlEvents: .TouchDown)
        self.recordButton.addTarget(self, action: #selector(onTouchUpRecordButton(_:)),
                                    forControlEvents: .TouchUpInside)

        // Save button
        self.saveButton = UIButton(frame: CGRectMake(0,0,70,50))
        self.saveButton.backgroundColor = UIColor.grayColor()
        self.saveButton.layer.masksToBounds = true
        self.saveButton.setTitle("Save", forState: .Normal)
        self.saveButton.layer.cornerRadius = 20.0
        self.saveButton.layer.position = CGPoint(x: self.view.bounds.width - 60,
                                                 y:self.view.bounds.height-50)
        self.saveButton.addTarget(self, action: #selector(onClickStopButton(_:)),
                                  forControlEvents: .TouchUpInside)

        // Add the buttons to the view
        self.view.addSubview(self.recordButton)
        self.view.addSubview(self.saveButton)
    }

    // Record button pressed: start recording a clip
    func onTouchDownRecordButton(sender: UIButton){
        if(!stopRecording) {
            let paths = NSSearchPathForDirectoriesInDomains(.DocumentDirectory,
                                                            .UserDomainMask, true)
            let documentsDirectory = paths[0] as String
            let outputFilePath = "\(documentsDirectory)/output-\(appendix).mov"
            appendix += 1
            let outputURL = NSURL(fileURLWithPath: outputFilePath)

            // Remove any leftover file with the same name
            let fileManager = NSFileManager.defaultManager()
            if(fileManager.fileExistsAtPath(outputFilePath)) {
                do {
                    try fileManager.removeItemAtPath(outputFilePath)
                } catch _ {
                }
            }
            print("Start recording: \(outputFilePath) ")
            fileOutput.startRecordingToOutputFileURL(outputURL, recordingDelegate: self)
        }
    }

    // Record button released: stop recording the current clip
    func onTouchUpRecordButton(sender: UIButton){
        if(!stopRecording) {
            timer?.invalidate()
            progressBarTimer?.invalidate()
            fileOutput.stopRecording()
        }
    }

    // Delegate method called when recording starts
    func captureOutput(captureOutput: AVCaptureFileOutput!,
                       didStartRecordingToOutputFileAtURL fileURL: NSURL!,
                       fromConnections connections: [AnyObject]!) {
        startProgressBarTimer()
        startTimer()
    }

    // Delegate method called when recording finishes
    func captureOutput(captureOutput: AVCaptureFileOutput!,
                       didFinishRecordingToOutputFileAtURL outputFileURL: NSURL!,
                       fromConnections connections: [AnyObject]!, error: NSError!) {
        let asset : AVURLAsset = AVURLAsset(URL: outputFileURL, options: nil)
        let duration : NSTimeInterval = CMTimeGetSeconds(asset.duration)
        print("Clip recorded: \(asset)")
        videoAssets.append(asset)
        assetURLs.append(outputFileURL.path!)
        remainingTime = remainingTime - duration

        // The maximum recording time has been reached: merge the clips automatically
        if remainingTime <= 0 {
            mergeVideos()
        }
    }

    // Timer that fires when the remaining time runs out
    func startTimer() {
        timer = NSTimer(timeInterval: remainingTime, target: self,
                        selector: #selector(ViewController.timeout), userInfo: nil,
                        repeats:true)
        NSRunLoop.currentRunLoop().addTimer(timer!, forMode: NSDefaultRunLoopMode)
    }

    // The maximum recording time has been reached
    func timeout() {
        stopRecording = true
        print("Time is up.")
        fileOutput.stopRecording()
        timer?.invalidate()
        progressBarTimer?.invalidate()
    }

    // Timer that drives the progress bar
    func startProgressBarTimer() {
        progressBarTimer = NSTimer(timeInterval: incInterval, target: self,
                                   selector: #selector(ViewController.progress),
                                   userInfo: nil, repeats: true)
        NSRunLoop.currentRunLoop().addTimer(progressBarTimer!,
                                            forMode: NSDefaultRunLoopMode)
    }

    // Advance the progress bar by one timer tick
    func progress() {
        // Share of the total time covered by one tick
        let progressProportion: CGFloat = CGFloat(incInterval / totalSeconds)
        let progressInc: UIView = UIView()
        progressInc.backgroundColor = UIColor(red: 55/255, green: 186/255, blue: 89/255,
                                              alpha: 1)
        let newWidth = progressBar.frame.width * progressProportion
        // Append a thin slice at the current end of the bar
        progressInc.frame = CGRect(x: oldX , y: 0, width: newWidth,
                                   height: progressBar.frame.height)
        oldX = oldX + newWidth
        progressBar.addSubview(progressInc)
    }

    // Save button tapped
    func onClickStopButton(sender: UIButton){
        mergeVideos()
    }

    // Merge the recorded clips
    func mergeVideos() {
        let composition = AVMutableComposition()

        // One video track and one audio track that will hold all clips
        let firstTrack = composition.addMutableTrackWithMediaType(
            AVMediaTypeVideo, preferredTrackID: CMPersistentTrackID())
        let audioTrack = composition.addMutableTrackWithMediaType(
            AVMediaTypeAudio, preferredTrackID: CMPersistentTrackID())

        var insertTime: CMTime = kCMTimeZero
        for asset in videoAssets {
            print("Merging clip: \(asset)")
            do {
                try firstTrack.insertTimeRange(
                    CMTimeRangeMake(kCMTimeZero, asset.duration),
                    ofTrack: asset.tracksWithMediaType(AVMediaTypeVideo)[0],
                    atTime: insertTime)
            } catch {
                print("Failed to insert video track: \(error)")
            }
            do {
                try audioTrack.insertTimeRange(
                    CMTimeRangeMake(kCMTimeZero, asset.duration),
                    ofTrack: asset.tracksWithMediaType(AVMediaTypeAudio)[0],
                    atTime: insertTime)
            } catch {
                print("Failed to insert audio track: \(error)")
            }
            // The next clip starts where this one ends
            insertTime = CMTimeAdd(insertTime, asset.duration)
        }

        // Rotate the video track by 90 degrees so the portrait recording is not exported sideways
        firstTrack.preferredTransform = CGAffineTransformMakeRotation(CGFloat(M_PI_2))

        // Path of the merged video
        let documentsPath = NSSearchPathForDirectoriesInDomains(.DocumentDirectory,
                                                                .UserDomainMask,true)[0]
        let destinationPath = documentsPath + "/mergeVideo-\(arc4random()%1000).mov"
        print("Merged video: \(destinationPath)")

        let videoPath: NSURL = NSURL(fileURLWithPath: destinationPath as String)
        let exporter = AVAssetExportSession(asset: composition,
                                            presetName:AVAssetExportPresetHighestQuality)!
        exporter.outputURL = videoPath
        exporter.outputFileType = AVFileTypeQuickTimeMovie
        exporter.shouldOptimizeForNetworkUse = true
        // Export what was recorded, capped at the maximum allowed duration
        let maxDuration = CMTimeMakeWithSeconds(totalSeconds, framesPerSecond)
        exporter.timeRange = CMTimeRangeMake(
            kCMTimeZero, CMTimeMinimum(composition.duration, maxDuration))
        exporter.exportAsynchronouslyWithCompletionHandler({
            // Save the merged video to the photo library
            self.exportDidFinish(exporter)
        })
    }

    // Save the merged video to the photo library
    func exportDidFinish(session: AVAssetExportSession) {
        print("Videos merged successfully!")
        let outputURL: NSURL = session.outputURL!

        // Save the finished recording to the photo library
        PHPhotoLibrary.sharedPhotoLibrary().performChanges({
            PHAssetChangeRequest.creationRequestForAssetFromVideoAtFileURL(outputURL)
        }, completionHandler: { (isSuccess: Bool, error: NSError?) in
            dispatch_async(dispatch_get_main_queue(),{
                // Reset the state
                self.reset()

                // Show an alert
                let alertController = UIAlertController(title: "Video saved",
                    message: "Do you want to play back the recording?", preferredStyle: .Alert)
                let okAction = UIAlertAction(title: "OK", style: .Default, handler: {
                    action in
                    // Play back the recording
                    self.reviewRecord(outputURL)
                })
                let cancelAction = UIAlertAction(title: "Cancel", style: .Cancel,
                                                 handler: nil)
                alertController.addAction(okAction)
                alertController.addAction(cancelAction)
                self.presentViewController(alertController, animated: true,
                                           completion: nil)
            })
        })
    }

    // The video has been saved: reset the state to prepare for a new recording
    func reset() {
        // Delete the clip files
        for assetURL in assetURLs {
            if(NSFileManager.defaultManager().fileExistsAtPath(assetURL)) {
                do {
                    try NSFileManager.defaultManager().removeItemAtPath(assetURL)
                } catch _ {
                }
                print("Deleted clip: \(assetURL)")
            }
        }

        // Clear the progress bar
        let subviews = progressBar.subviews
        for subview in subviews {
            subview.removeFromSuperview()
        }

        // Restore the remaining state
        videoAssets.removeAll(keepCapacity: false)
        assetURLs.removeAll(keepCapacity: false)
        appendix = 1
        oldX = 0
        stopRecording = false
        remainingTime = totalSeconds
    }

    // Play back the recording
    func reviewRecord(outputURL: NSURL) {
        // Create a video player from the local file URL
        let player = AVPlayer(URL: outputURL)
        let playerViewController = AVPlayerViewController()
        playerViewController.player = player
        self.presentViewController(playerViewController, animated: true) {
            playerViewController.player!.play()
        }
    }

}
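The sample advances the progress bar by adding one thin subview per timer tick. An alternative, sketched below in current Swift syntax, is to compute the filled width directly from the elapsed time and resize a single view. The function name `filledWidth(elapsed:total:barWidth:)` is an assumption made for this illustration and does not appear in the sample above.

```swift
import Foundation

/// Width of the filled part of a progress bar, clamped to the bar's width.
func filledWidth(elapsed: Double, total: Double, barWidth: Double) -> Double {
    guard total > 0 else { return 0 }
    let fraction = min(max(elapsed / total, 0), 1)
    return barWidth * fraction
}

// With the sample's numbers: a 0.05 s tick and a 15 s limit.
let ticksToFill = Int((15.0 / 0.05).rounded())
let halfway = filledWidth(elapsed: 7.5, total: 15, barWidth: 320)
print(ticksToFill, halfway) // prints "300 160.0"
```

In a view controller, the result would be assigned to the width of one fill view on each tick, which avoids accumulating up to 300 subviews per recording.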
